A Gestural Interface to Virtual Environments

System Overview

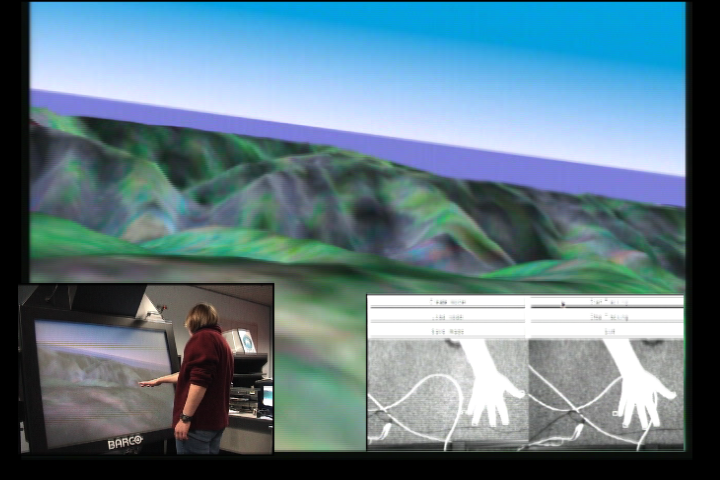

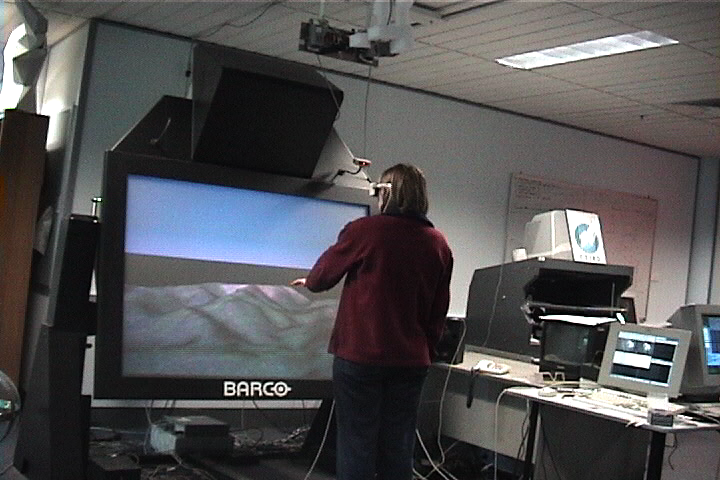

The gesture interface system was developed as an interface to virtual environments and has been used to control navigation and manipulation of 3D objects. The system is used in conjunction with the joint CSIRO/ANU Virtual Environments lab. The environment consists of a Barco Baron projection table for 3D graphics display with CrystalEyes stereo shutter glasses for stereoscopic viewing. The environment is powered by an SGI Onyx2. Polhemus FastTrak sensors and stylus are available for non-gestural input.

Robust & Real-Time Tracking in 3D

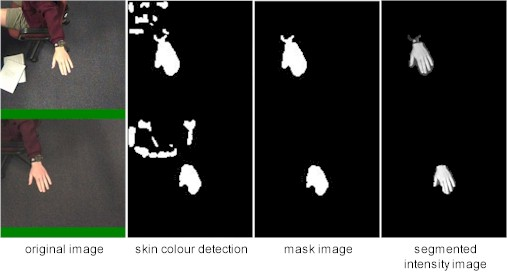

Skin Colour Detection

Classification

Applications

Navigation Control - Terrain Flythrough

A common task in 3D manipulation is user control of the viewpoint within a scene or virtual world. The user should be able to move easily through the scene. As a demonstration of the use of gesture to control the user's viewpoint, we constructed a terrain flythrough. The user steers through the flythrough by tilting the hand, as in the image below. Forward and backward motion is controlled by the location of the user's hand in space: moving the hand forward moves the viewpoint forwards, and moving it back moves the viewpoint backwards.

3D Object Manipulation - Blocks

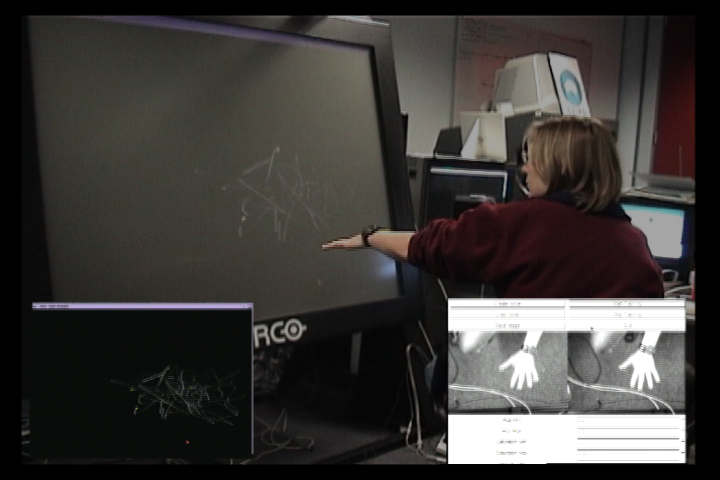

Along with viewpoint control, object manipulation is a fundamental interaction requirement in 3D virtual environments. Object manipulations include selection, translation, rotation and scaling.

Multidimensional Input - Sound Space Exploration

An advantage of gesture over other 3D trackers is the ability to provide multidimensional input. While a Polhemus stylus or similar device provides position and orientation information for a single point in space (the stylus tip), a gesture interface can input many positions simultaneously because the system tracks multiple features. To demonstrate this ability, we developed "HandSynth", a tool for exploring multidimensional sound synthesis algorithms. In HandSynth, the position of each fingertip is tied to different parameters within the sound generator. Moving the hand about in space and changing its orientation generates a variety of sounds, synthesised by changing up to 15 parameters at the same time. We used HandSynth to simultaneously control 5 FM synths, each with 3 parameters: carrier frequency, modulation frequency and modulation depth. Exploring the sound space this way is much quicker and easier than the conventional approach, which involves twiddling individual knobs and sliders for many tedious hours to understand the complex perceptual interactions between parameters.

HandSynth was also used as an interface for non-linear navigation in a 3-minute sampled sound file. Movement from left to right acted as fast forward or rewind. Front-back movement provided normal playback speed at the current position. Up and down movement allowed slow motion forward and back. This interface allows the user to quickly hear an overview with left-right hand movement, to zoom in on detail, and to have random access into the file based on the position of the hand.

The synthesis and navigation were combined to create a compositional tool in which 3 parameters of reverb and flanging effects were controlled by the spatial position of two fingertips, while the other 3 fingertips accessed samples from the sound file to be processed through the effects.

Finally, we used the sounds from HandSynth as input to a music visualisation based on a flock of 'boid' artificial lifeforms that respond to different frequencies in the sound. The motion of the boids is displayed graphically, providing a visual display of auditory information. With HandSynth providing the audio input, the boids respond to the user's hand movements.
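
The fingertip-to-parameter mapping used for the 5 FM synths can be illustrated with a minimal sketch. It is not the HandSynth implementation. It assumes that each fingertip position arrives as normalised (x, y, z) coordinates in [0, 1], that x, y and z drive carrier frequency, modulation frequency and modulation depth respectively, and that modulation depth is interpreted as a modulation index. The parameter ranges, the sample rate and all function names are hypothetical.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE = 8000  # Hz; assumed, kept low so the example runs quickly

# Assumed parameter ranges; the ranges used by the original system are not stated.
CARRIER_RANGE = (100.0, 1000.0)   # Hz
MODULATOR_RANGE = (1.0, 200.0)    # Hz
DEPTH_RANGE = (0.0, 5.0)          # modulation index (dimensionless)


@dataclass
class FMParams:
    carrier_hz: float
    modulator_hz: float
    depth: float


def lerp(lo, hi, u):
    """Linearly map u in [0, 1] onto [lo, hi], clamping out-of-range input."""
    u = min(1.0, max(0.0, u))
    return lo + (hi - lo) * u


def fingertip_to_params(tip):
    """Map one normalised fingertip position (x, y, z) to one voice's parameters."""
    x, y, z = tip
    return FMParams(
        carrier_hz=lerp(*CARRIER_RANGE, x),
        modulator_hz=lerp(*MODULATOR_RANGE, y),
        depth=lerp(*DEPTH_RANGE, z),
    )


def render(fingertips, duration_s=0.1):
    """Mix one FM voice per fingertip into a list of samples in [-1, 1]."""
    voices = [fingertip_to_params(tip) for tip in fingertips]
    n = int(SAMPLE_RATE * duration_s)
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        mix = 0.0
        for v in voices:
            # Classic FM voice: sin(2*pi*fc*t + depth * sin(2*pi*fm*t))
            modulation = v.depth * math.sin(2 * math.pi * v.modulator_hz * t)
            mix += math.sin(2 * math.pi * v.carrier_hz * t + modulation)
        samples.append(mix / len(voices))  # average so the mix stays in [-1, 1]
    return samples


# Five hypothetical fingertip positions: 5 voices x 3 parameters = 15 parameters.
EXAMPLE_HAND = [
    (0.10, 0.20, 0.30),
    (0.25, 0.40, 0.10),
    (0.50, 0.50, 0.50),
    (0.75, 0.10, 0.80),
    (0.90, 0.70, 0.20),
]

audio = render(EXAMPLE_HAND)
```

Re-running `render` with updated fingertip positions on every tracking frame would change all 15 parameters at once, which is the property the text contrasts with adjusting individual knobs and sliders.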

Publications

R. O'Hagan and A. Zelinsky, "Vision-based Gesture Interfaces to Virtual Environments", 1st Australasian User Interfaces Conference (AUIC2000) Proceedings, Canberra, Australia, pp 73-80. January 2000.

R. O'Hagan and A. Zelinsky, "Finger Track - A Robust and Real-Time Gesture Interface", Advanced Topics in Artificial Intelligence, Tenth Australian Joint Conference on Artificial Intelligence (AI'97) Proceedings, Perth, Australia, pp 475-484. December 1997.

Date Last Modified: Sunday, 8th Jul 2001