Quick Overview

A GPU's higher processing power compared to a standard CPU comes at the cost of reduced data caching and flow control logic, since more of its transistors are devoted to data processing. This limits how an application may access memory and implement flow control. As a result, implementing certain algorithms (even trivial ones) on the GPU may be difficult or may not be computationally justified.

Histogram computation has traditionally been difficult to implement efficiently on the GPU. Without an efficient GPU histogram method, the programmer often has to move the data back from device (GPU) memory to the host (CPU), which results in costly data transfers and reduced efficiency. A simple histogram computation can indeed become the bottleneck of an otherwise efficient application.
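One commonly cited source of the difficulty is that many parallel threads may try to increment the same bin at the same time, so updates to a single shared histogram have to be serialized (for example with atomic operations). A general workaround is privatization: each worker fills its own sub-histogram without conflicts, and the partial results are merged at the end. The C++ sketch below illustrates that generic idea on the CPU with `std::thread`; the function name `privatized_histogram` and the fixed 256 bins for 8-bit data are assumptions for illustration, and this is not the GPU implementation described in the publications.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical helper: 256-bin histogram of 8-bit data computed with
// per-worker private sub-histograms that are merged at the end.
std::vector<std::uint32_t> privatized_histogram(const std::vector<std::uint8_t>& data,
                                                unsigned num_workers) {
    assert(num_workers > 0 && "need at least one worker");
    constexpr std::size_t kBins = 256;

    // One private sub-histogram per worker, so no two workers write the same counter.
    std::vector<std::vector<std::uint32_t>> partial(
        num_workers, std::vector<std::uint32_t>(kBins, 0));

    // Split the input into contiguous chunks, one per worker.
    const std::size_t chunk = (data.size() + num_workers - 1) / num_workers;
    std::vector<std::thread> workers;
    for (unsigned w = 0; w < num_workers; ++w) {
        workers.emplace_back([&data, &partial, chunk, w] {
            const std::size_t begin = std::min(data.size(), w * chunk);
            const std::size_t end = std::min(data.size(), begin + chunk);
            for (std::size_t i = begin; i < end; ++i) {
                ++partial[w][data[i]];  // contention-free: only worker w touches partial[w]
            }
        });
    }
    for (std::thread& t : workers) t.join();

    // Merge step: sum the private sub-histograms bin by bin.
    std::vector<std::uint32_t> hist(kBins, 0);
    for (const auto& sub : partial) {
        for (std::size_t b = 0; b < kBins; ++b) hist[b] += sub[b];
    }
    return hist;
}
```

On a GPU, the per-worker sub-histograms would typically live in per-block shared memory. The trade-off is extra memory per worker plus a final merge pass, in exchange for no conflicting writes during the counting phase.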

Download the Code

You can find the source code for two efficient histogram computation methods for CUDA-compatible GPUs here.

The methods are described in the following publications:

"Efficient histogram algorithms for NVIDIA CUDA compatible devices"

and "Speeding up mutual information computation using NVIDIA CUDA hardware".

Quick Overview

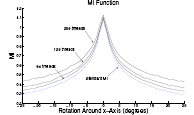

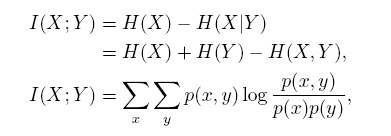

Mutual information of two random variables is the amount of information that each carries about the other and is defined as

$$I(X;Y) = H(X) - H(X \mid Y) = H(X) + H(Y) - H(X,Y)$$

where H(X) and H(Y) are the entropies of X and Y, H(X|Y) is the conditional entropy of X given Y (the information content of X that remains once Y is known), and H(X,Y) is the joint entropy, a measure of the combined information of the two random variables. I(X;Y) can be thought of as the reduction in uncertainty about X that results from knowing Y. The uncertainty is maximally reduced when there is a one-to-one mapping between the two random variables, and it is not reduced at all when the two random variables are independent and provide no information about one another.
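For discrete random variables, the two limiting cases described above can be checked directly from I(X;Y) = H(X) - H(X|Y) = H(X) + H(Y) - H(X,Y):

```latex
\begin{aligned}
\text{Independent } X, Y:&\quad p(x,y) = p(x)\,p(y) \;\Rightarrow\; H(X,Y) = H(X) + H(Y) \;\Rightarrow\; I(X;Y) = 0,\\
\text{One-to-one } Y = f(X):&\quad H(X \mid Y) = 0 \;\Rightarrow\; I(X;Y) = H(X) = H(Y).
\end{aligned}
```

Since I(X;Y) never exceeds min(H(X), H(Y)), the one-to-one case attains the upper bound.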

The concept of mutual information has its origins in information theory and

is widely used in other disciplines. In medical image analysis, mutual information

is used as a similarity measure for multi-modal registration.
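In intensity-based registration, the two random variables are typically the intensities of corresponding pixels in the two images, and their probabilities are estimated from a joint intensity histogram. The C++ sketch below shows this on the CPU under stated assumptions: 8-bit images of equal size, one bin per intensity value, and hypothetical helper names `entropy_bits` and `mutual_information`. It is not the GPU method from the publication; it only shows where the histogram enters the MI computation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Shannon entropy in bits of a discrete distribution; zero-probability
// entries contribute nothing (0 * log 0 is taken as 0).
double entropy_bits(const std::vector<double>& p) {
    double h = 0.0;
    for (double q : p) {
        if (q > 0.0) h -= q * std::log2(q);
    }
    return h;
}

// Hypothetical helper: MI (in bits) between two equally sized 8-bit images,
// estimated from their joint intensity histogram as H(A) + H(B) - H(A,B).
double mutual_information(const std::vector<std::uint8_t>& a,
                          const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size() && !a.empty());
    constexpr std::size_t kBins = 256;
    const double n = static_cast<double>(a.size());

    // Joint histogram: cell (i, j) counts pixel positions where a == i and b == j.
    std::vector<double> joint(kBins * kBins, 0.0);
    for (std::size_t k = 0; k < a.size(); ++k) {
        joint[static_cast<std::size_t>(a[k]) * kBins + b[k]] += 1.0;
    }

    // Normalize counts to probabilities and accumulate the marginals.
    std::vector<double> pa(kBins, 0.0), pb(kBins, 0.0);
    for (std::size_t i = 0; i < kBins; ++i) {
        for (std::size_t j = 0; j < kBins; ++j) {
            double& p = joint[i * kBins + j];
            p /= n;
            pa[i] += p;
            pb[j] += p;
        }
    }
    return entropy_bits(pa) + entropy_bits(pb) - entropy_bits(joint);
}
```

A registration search evaluates MI for many candidate transformations, and each evaluation rebuilds the joint histogram from the resampled image, which is why histogram speed dominates. Real registration codes often use fewer, coarser bins than the 256 assumed here.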

Download the Code

You can find the source code for efficient mutual information (MI) computation methods for CUDA-compatible GPUs here.

The methods are described in the following publication:

"Speeding up mutual information computation using NVIDIA CUDA hardware".
