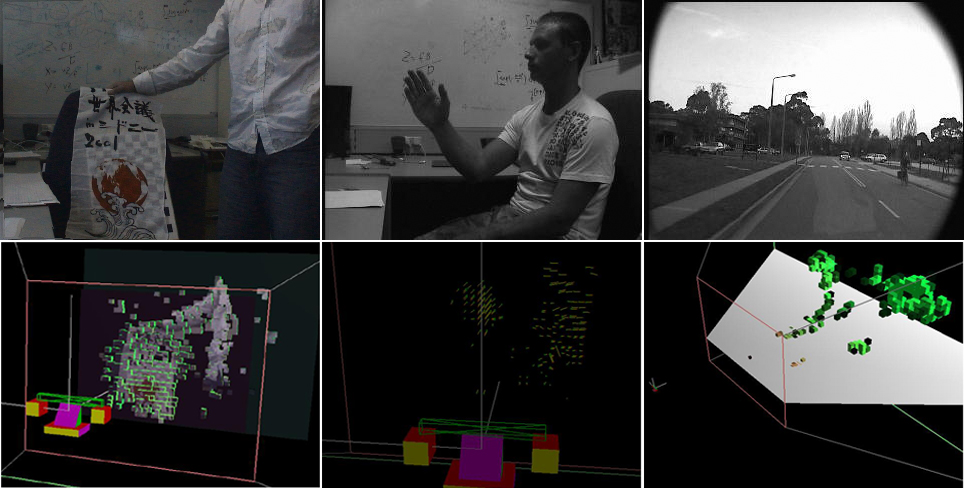

Because many useful algorithms have been developed for static stereo vision, one of the first milestones in the development of CeDAR's vision processing systems was the ability to run any static stereo algorithm on the active platform. To achieve this, a real-time active rectification technique was developed that relates successive frames to one another, and left frames to right frames, so that global mosaics of the scene can be constructed (REF 4). Any static algorithm can then be converted to the active case simply by operating on the global mosaics.
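The rectification step can be illustrated with a simplified geometric model. This is a minimal sketch under our own assumptions, not the published CeDAR technique: an ideal pinhole camera that rotates exactly about its optical centre, a tilt axis that carries the pan axis, and known pan/tilt joint angles. Under those assumptions every pixel of the current frame maps into a fixed mosaic frame through the homography `H = K R K^-1`, where `K` holds the camera intrinsics and `R` rotates current-camera rays into the reference frame. The function names, the parameters `f`, `cx`, `cy`, and the sign conventions are hypothetical; a real system would also have to deal with the stereo baseline and calibration error.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_homography(f, cx, cy, pan, tilt):
    """Homography H = K R K^-1 for a purely rotating pinhole camera.

    Assumptions (hypothetical, for illustration): focal length f in pixels,
    principal point (cx, cy), rotation about the optical centre, and a tilt
    axis that carries the pan axis, so camera-to-reference R = R_tilt @ R_pan.
    """
    cp, sp = math.cos(pan), math.sin(pan)
    ct, st = math.cos(tilt), math.sin(tilt)
    r_pan = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]   # about y axis
    r_tilt = [[1.0, 0.0, 0.0], [0.0, ct, -st], [0.0, st, ct]]  # about x axis
    k = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
    k_inv = [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]
    return matmul3(matmul3(k, matmul3(r_tilt, r_pan)), k_inv)


def to_mosaic(h, u, v):
    """Map pixel (u, v) of the current frame into mosaic coordinates."""
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    return x / w, y / w
```

For example, with `f = 500`, `cx = 320`, `cy = 240` and a 45° pan, the image centre lands at `(820, 240)` in the mosaic, 500 pixels to the right of the reference principal point.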

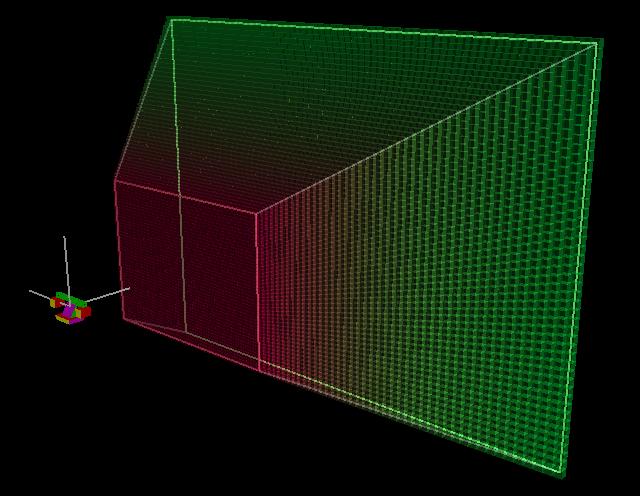

The next stage of development was a 3D perception of where visual surfaces and free space are located in real dynamic scenes, and of how these surfaces are moving, regardless of any deliberate motions of the viewing apparatus or changes in the gaze fixation point. A Bayesian occupancy grid approach was developed to incorporate spatio-temporal information (REF 4).
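The occupancy part of this idea can be sketched with the standard log-odds Bayes update used in occupancy-grid mapping. This is a minimal sketch under our own assumptions, not the CeDAR implementation: a 2D grid, an inverse sensor model that supplies `p_occupied` for a cell, and a hypothetical `decay` factor standing in for temporal forgetting in a dynamic scene. It tracks occupancy only; it does not model how surfaces move.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


class OccupancyGrid:
    """Minimal 2D Bayesian occupancy grid stored in log-odds form.

    Measurements are fused with the binary Bayes filter update. The `decay`
    factor is an assumption, not from the source: predict() pulls each cell
    back toward the prior so stale evidence fades between frames.
    """

    def __init__(self, width, height, prior=0.5, decay=1.0):
        self.l0 = logit(prior)
        self.decay = decay
        self.cells = [[self.l0] * width for _ in range(height)]

    def predict(self):
        """Temporal step: shrink every cell's evidence toward the prior."""
        for row in self.cells:
            for x, l in enumerate(row):
                row[x] = self.l0 + self.decay * (l - self.l0)

    def update(self, x, y, p_occupied):
        """Fuse one inverse-sensor reading p(occupied | z) for cell (x, y)."""
        self.cells[y][x] += logit(p_occupied) - self.l0

    def probability(self, x, y):
        """Posterior probability that cell (x, y) is occupied."""
        return 1.0 / (1.0 + math.exp(-self.cells[y][x]))
```

Two independent readings of 0.7 on a cell with a 0.5 prior give odds of (0.7/0.3)² = 49/9, i.e. a posterior of 49/58 ≈ 0.84. Working in log-odds turns each Bayes update into an addition, which keeps the per-frame cost low.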

Spatial and flow perceptions are peripheral responses; that is, they operate continually over the entire visual field, regardless of the geometry of the active platform.

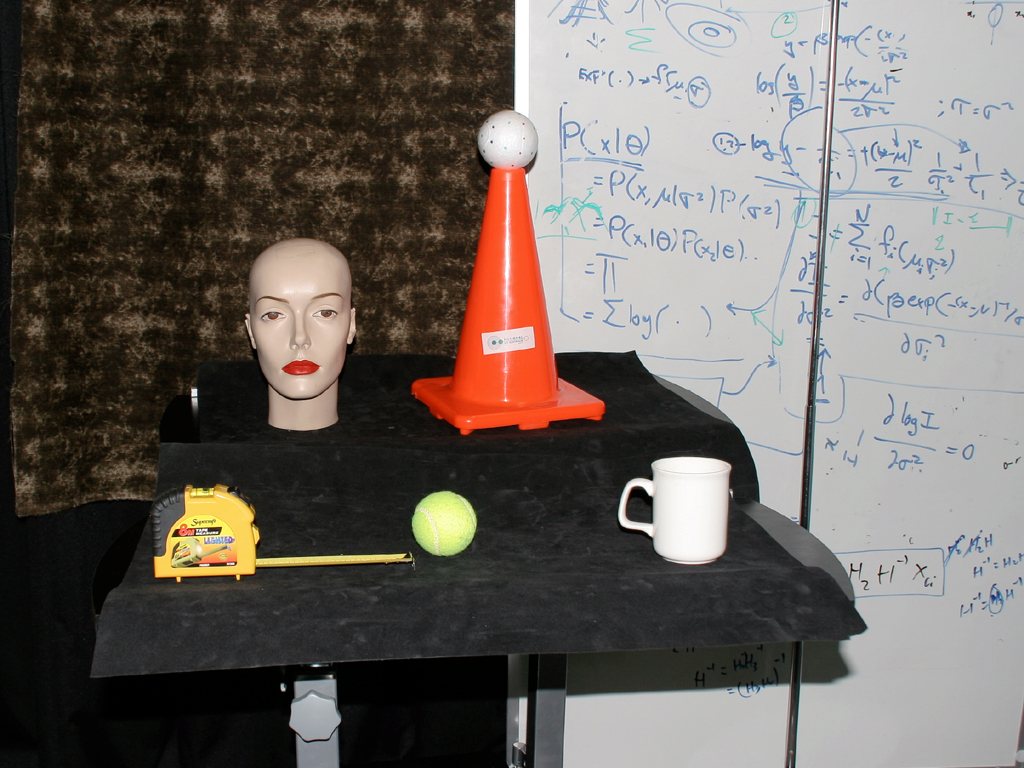

CeDAR can now cope with moving cameras, and has a perception of where free space and visual surfaces are in the scene. The next stage was to develop coordinated stereo fixation. Humans find it hard to fixate on free space; they are more interested in attending to visual surfaces. Accordingly, a robust Markov Random Field Zero Disparity Filter (MRF ZDF, REF 7) was developed to synthesize this property of the primate vision system. This is a foveal response, and it permits CeDAR to focus on, track, and segment out objects of interest in the scene.
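The zero-disparity idea can be illustrated with a small binary MRF. When both cameras fixate the same point, surfaces near that point appear at roughly zero disparity, so left and right pixels at the same image coordinates match there. The sketch below is our own simplification, not the filter from REF 7: it scores each pixel's left/right mismatch with a sum-of-absolute-differences (SAD) window, adds a Potts smoothness penalty between 4-neighbours, and minimises the energy with iterated conditional modes (ICM). The function name and the parameters `tau`, `lam` and `radius` are hypothetical.

```python
def zero_disparity_mask(left, right, tau=10.0, lam=5.0, radius=1, iters=5):
    """Binary zero-disparity segmentation of a verged stereo pair (sketch).

    left, right: equal-sized greyscale images as lists of rows.
    tau:    energy of labelling a pixel "off" (background).
    lam:    Potts penalty per 4-neighbour with a different label.
    radius: half-width of the SAD window used for the data term.
    Returns a mask: 1 = zero disparity (fixated surface), 0 = elsewhere.
    """
    h, w = len(left), len(left[0])

    def sad(y, x):
        # Mean absolute left/right difference in a window, at zero disparity.
        total, n = 0.0, 0
        for yy in range(max(0, y - radius), min(h, y + radius + 1)):
            for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                total += abs(left[yy][xx] - right[yy][xx])
                n += 1
        return total / n

    cost_on = [[sad(y, x) for x in range(w)] for y in range(h)]
    # Initialise from the data term alone, then refine with ICM sweeps.
    labels = [[1 if cost_on[y][x] < tau else 0 for x in range(w)]
              for y in range(h)]
    for _ in range(iters):
        changed = False
        for y in range(h):
            for x in range(w):
                nbrs = [labels[yy][xx]
                        for yy, xx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= yy < h and 0 <= xx < w]
                e_on = cost_on[y][x] + lam * nbrs.count(0)
                e_off = tau + lam * nbrs.count(1)
                new = 1 if e_on < e_off else 0
                if new != labels[y][x]:
                    labels[y][x] = new
                    changed = True
        if not changed:
            break
    return labels
```

ICM is used here only because it is short and easy to follow; it converges to a local minimum, and graph-cut solvers are a common choice when the exact minimum of a binary Potts energy is wanted. The smoothness term is what removes isolated chance matches in the background that the data term alone would accept.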

Most recently, CeDAR has learnt to decide where to look by itself. A saliency map is calculated that highlights the most interesting things in the scene. The approach draws heavily on biological inspiration, such as the concept of active-dynamic Inhibition of Return (IOR: once we have considered an object, we are less likely to consider it again, at least in the short term, even if it moves) and a Task-Dependent Spatial Bias (TSB: biasing gaze fixation to help with whatever task we are currently doing) (REF 8).
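The interplay of saliency, IOR and TSB can be sketched as a scoring rule over candidate fixation targets. In this minimal sketch, which reflects our own assumptions and not the method of REF 8, each candidate carries a saliency value and an object id from some tracker. Inhibition is stored per object id, so it follows the object when it moves, and it decays each time a new fixation is chosen. The task bias is modelled as a Gaussian around a task-relevant point. The classes, the multiplicative combination, and the parameters `ior_gain`, `ior_decay` and `bias_sigma` are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    obj_id: int      # identity from a tracker (hypothetical), so IOR follows motion
    x: float         # image position in pixels
    y: float
    saliency: float  # bottom-up saliency of the candidate, >= 0


class GazeSelector:
    """Choose the next fixation: score = saliency * task_bias * (1 - inhibition).

    Inhibition is kept per object id rather than per image location, so it
    moves with the object; it decays geometrically on every select() call.
    """

    def __init__(self, ior_gain=0.8, ior_decay=0.7, bias_sigma=100.0):
        self.ior_gain = ior_gain
        self.ior_decay = ior_decay
        self.bias_sigma = bias_sigma
        self.inhibition = {}  # obj_id -> inhibition value in [0, 1]

    def task_bias(self, c, task_center):
        # Gaussian spatial bias around a task-relevant point; 1.0 with no task.
        if task_center is None:
            return 1.0
        tx, ty = task_center
        d2 = (c.x - tx) ** 2 + (c.y - ty) ** 2
        return math.exp(-d2 / (2.0 * self.bias_sigma ** 2))

    def select(self, candidates, task_center=None):
        """Return the winning Candidate; candidates must be non-empty."""
        for k in self.inhibition:
            self.inhibition[k] *= self.ior_decay
        best = max(candidates, key=lambda c: c.saliency
                   * self.task_bias(c, task_center)
                   * (1.0 - self.inhibition.get(c.obj_id, 0.0)))
        # Inhibit the chosen object so gaze moves on next time.
        self.inhibition[best.obj_id] = min(
            1.0, self.inhibition.get(best.obj_id, 0.0) + self.ior_gain)
        return best
```

With the default parameters, two candidates with saliencies 1.0 and 0.6 are fixated in the order 1.0, 0.6, 1.0: after the first saccade the stronger object's score drops to 0.44, and it wins again only once its inhibition has decayed, even if it has moved in the meantime.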

As a whole, the system can understand where free space and visual surfaces are in dynamic scenes while simultaneously saccading between regions and objects of interest and maintaining its gaze upon those regions as it sees fit, regardless of their motion. It also keeps a short-term memory of where such regions are and how they are moving, and it identifies which pixels in its view belong to the object of interest and which do not. Vision processing occurs on a small network of computers. Cue maps that are known to exist in the human brain (via neural correlates) are implemented on servers in the processing network. The vision processing network has been shown to exhibit similarities with the processing centres of the primate early visual brain.
