Many existing reinforcement learning algorithms treat continuous variables, like speed, by discretising them into a few levels (for example slow, medium, fast). Discretising does not allow smooth control.

The Robotic Systems Laboratory has developed an algorithm that

allows reinforcement learning with continuous variables and tested the

algorithm on real robots with real vision Systems.

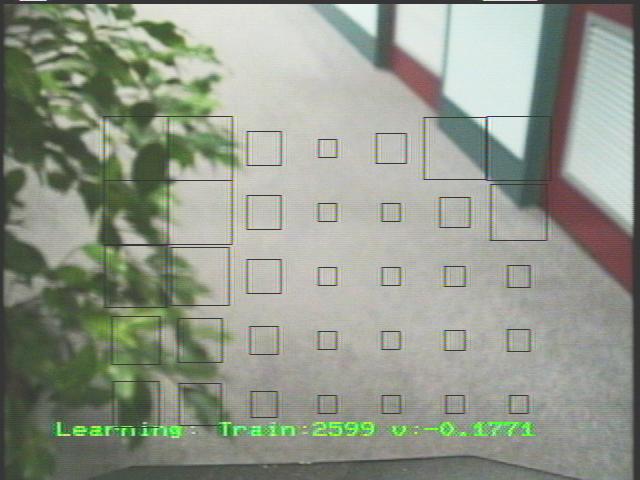

Learned wandering for a Nomad 200 mobile robot:

"Reinforcement Learning

for a Vision Based Mobile Robot," by C. Gaskett, L. Fletcher, and

A. Zelinsky, in Proceedings of IEEE/RSJ International Conference on Intelligent

Robots and Systems (IROS2000) © IEEE, (Takamatsu, Japan, October 2000).

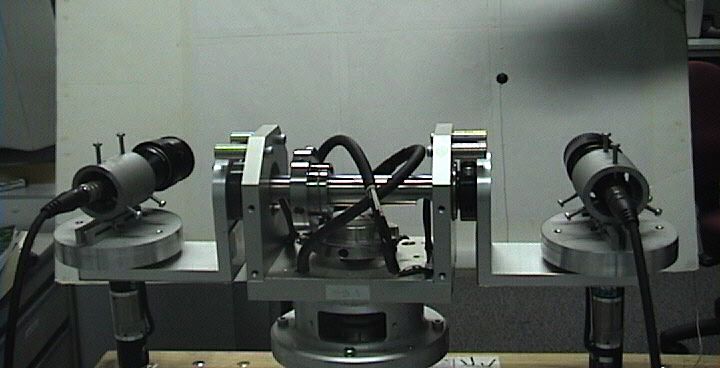

Learned smooth fixation movement for the HyDrA active vision head (unpublished):

see also: A survey of continuous state and action Q-learning approaches:

"Q-Learning in Continuous

State and Action Spaces," by C. Gaskett, D. Wettergreen, and A.

Zelinsky, in Proceedings of the 12th Australian Joint Conference on Artificial

Intelligence © Springer-Verlag,

(Sydney, Australia, December 1999).

Other publications: Chris Gaskett's homepage